Magazine: Features

Interfaces on the go

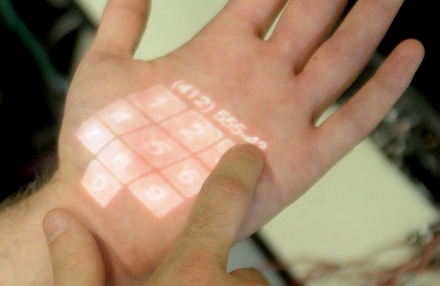

Enabling mobile micro-interactions with physiological computing.


Full text is available in the ACM Digital Library as PDF, HTML, and Digital Edition.


Pointers

The ACM Special Interest Group on Computer-Human Interaction (SIGCHI) keeps a handy calendar of events related to HCI.

http://www.sigchi.org/conferences/calendarofevents.html

Jargon

GUI: Graphical User Interface

HCI: Human-Computer Interaction, a subfield of computer science

Multi-touch: A usually flat surface that can detect gestures made with multiple fingers, popularized by the iPhone and a common component of tangible user interfaces

TUI: Tangible User Interface

WIMP: Windows, Icons, Menus, Pointers; the typical way we interact with a GUI

WYSIMOLWYG: What You See Is More or Less What You Get